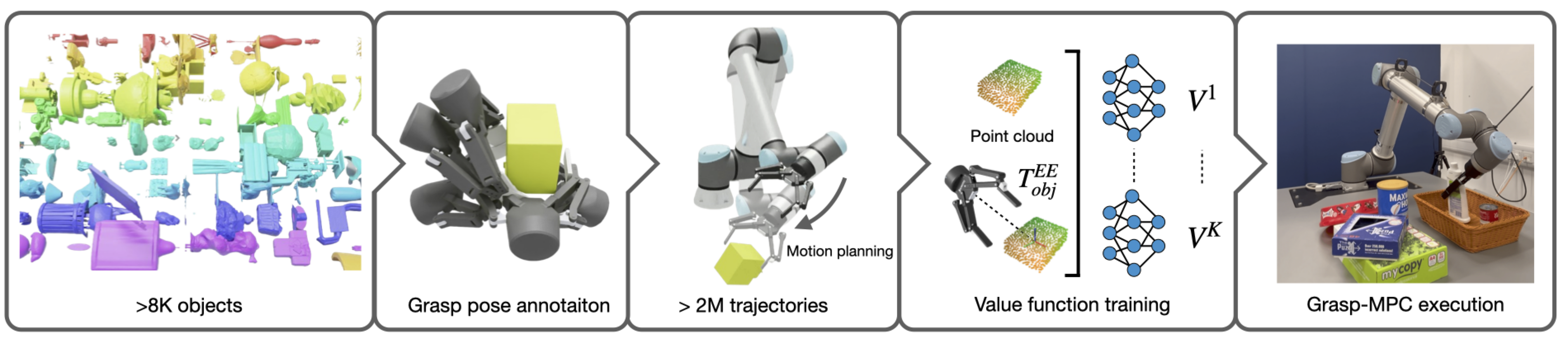

Grasping remains a significant challenge in robotics, particularly in unstructured environments with diverse and novel objects. Open-loop grasping methods, while effective in controlled settings, often struggle in dynamic or cluttered environments because they cannot adapt to changes in object pose or recover from grasp prediction errors. State-of-the-art closed-loop methods address some of these challenges, but they are often restricted to simplified settings, lack robustness in safely executing grasps in cluttered scenes, and generalize poorly to novel objects. To address these limitations, we propose Grasp-MPC, a closed-loop 6-DoF vision-based grasping policy designed for robust and reactive grasping of novel objects in cluttered environments. Grasp-MPC incorporates a value function into a model predictive control (MPC) framework; the value function is trained on visual observations from a large-scale synthetic dataset of 2 million grasp trajectories that includes both successful and failed attempts. The value function serves as a cost term and is combined with collision and minimum-jerk costs, enabling real-time, safe, and adaptive grasp execution. To initiate each grasp attempt, Grasp-MPC uses an off-the-shelf grasp prediction model to move the gripper to a pre-grasp pose, from which it executes closed-loop control to complete the grasp. As a closed-loop policy, Grasp-MPC can react dynamically to changes in object pose, a capability validated in real-world experiments. Grasp-MPC is extensively evaluated on FetchBench and in real-world settings, including tabletop, cluttered tabletop, and shelf scenarios, where it significantly outperforms baselines in grasp success and generalizes well to novel objects.
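The way a value-based cost can be combined with collision and minimum-jerk costs is illustrated by the minimal sketch below. It is not the Grasp-MPC implementation: the learned, vision-based value function is replaced by a hypothetical stand-in (`toy_value`), the obstacle is a single point, and the weights, the function names, and the choice of picking the cheapest of a few candidate trajectories are all assumptions made for illustration.

```python
import numpy as np

def jerk_cost(traj, dt):
    # traj has shape (T, 3); the third finite difference approximates jerk.
    jerk = np.diff(traj, n=3, axis=0) / dt**3
    return float(np.sum(jerk**2))

def collision_cost(traj, obstacle, margin):
    # Hinge penalty for waypoints inside a safety margin around a point obstacle.
    dist = np.linalg.norm(traj - obstacle, axis=1)
    return float(np.sum(np.maximum(0.0, margin - dist) ** 2))

def toy_value(traj, goal):
    # Hypothetical stand-in for a learned value function (assumption):
    # returns a score in (0, 1] that is higher when the final waypoint is near the goal.
    return float(np.exp(-np.linalg.norm(traj[-1] - goal)))

def total_cost(traj, goal, obstacle, dt=0.1, margin=0.05,
               w_value=1.0, w_coll=100.0, w_jerk=1e-4):
    # Weighted sum of the three terms; the weights are illustrative, not tuned.
    return (w_value * (1.0 - toy_value(traj, goal))
            + w_coll * collision_cost(traj, obstacle, margin)
            + w_jerk * jerk_cost(traj, dt))

def select_trajectory(candidates, goal, obstacle):
    # Evaluate every candidate and return the index of the cheapest one.
    costs = np.array([total_cost(t, goal, obstacle) for t in candidates])
    return int(np.argmin(costs)), costs

# Three hand-made candidate gripper paths from the origin toward the goal.
s = np.linspace(0.0, 1.0, 11)[:, None]
goal = np.array([0.3, 0.0, 0.0])
obstacle = np.array([0.15, 0.0, 0.0])

straight = s * goal                                   # passes through the obstacle
arc = s * goal + np.hstack([np.zeros_like(s),         # bends around the obstacle
                            0.1 * np.sin(np.pi * s),
                            np.zeros_like(s)])
short = s * np.array([0.1, 0.1, 0.0])                 # collision-free but stops early

best, costs = select_trajectory([straight, arc, short], goal, obstacle)
```

In a closed-loop setting, a selection like this would be repeated at every control step with fresh observations, so that only the first part of the chosen trajectory is executed before replanning.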